This is my blog.

好冷啊

想要窝起来

Cross validation(CV)

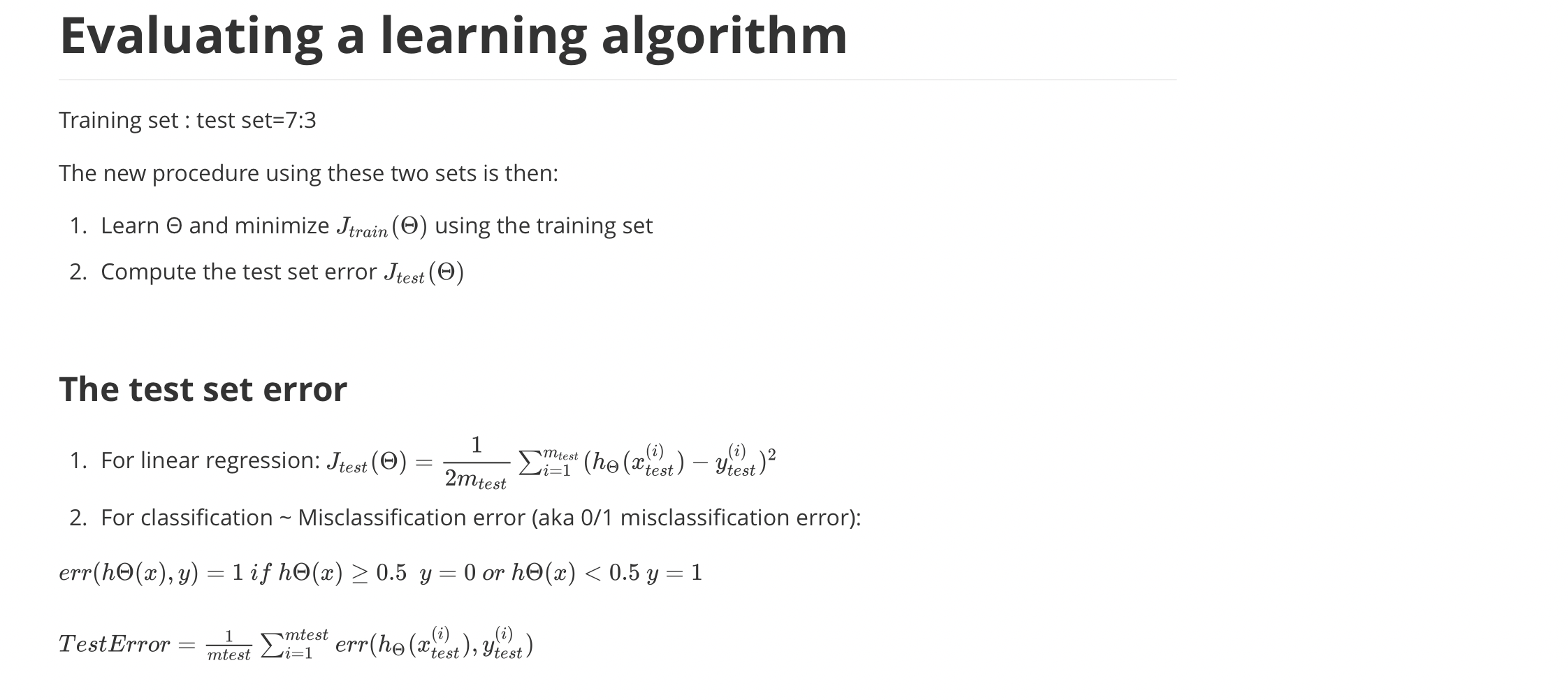

In order to choose the model of your hypothesis, you can test each degree of polynomial and look at the error result.

One way to break down our dataset into the three sets is:

- Training set: 60%

- Cross validation set: 20%

Test set: 20%

calculate three separate error values for the three different sets using the following method:

- Optimize the parameters in Θ using the training set for each polynomial degree.

- Find the polynomial degree d with the least error using the cross validation set.

- Estimate the generalization error using the test set with$ J_{test}(\Theta^{(d)})$, (d = theta from polynomial with lower error);

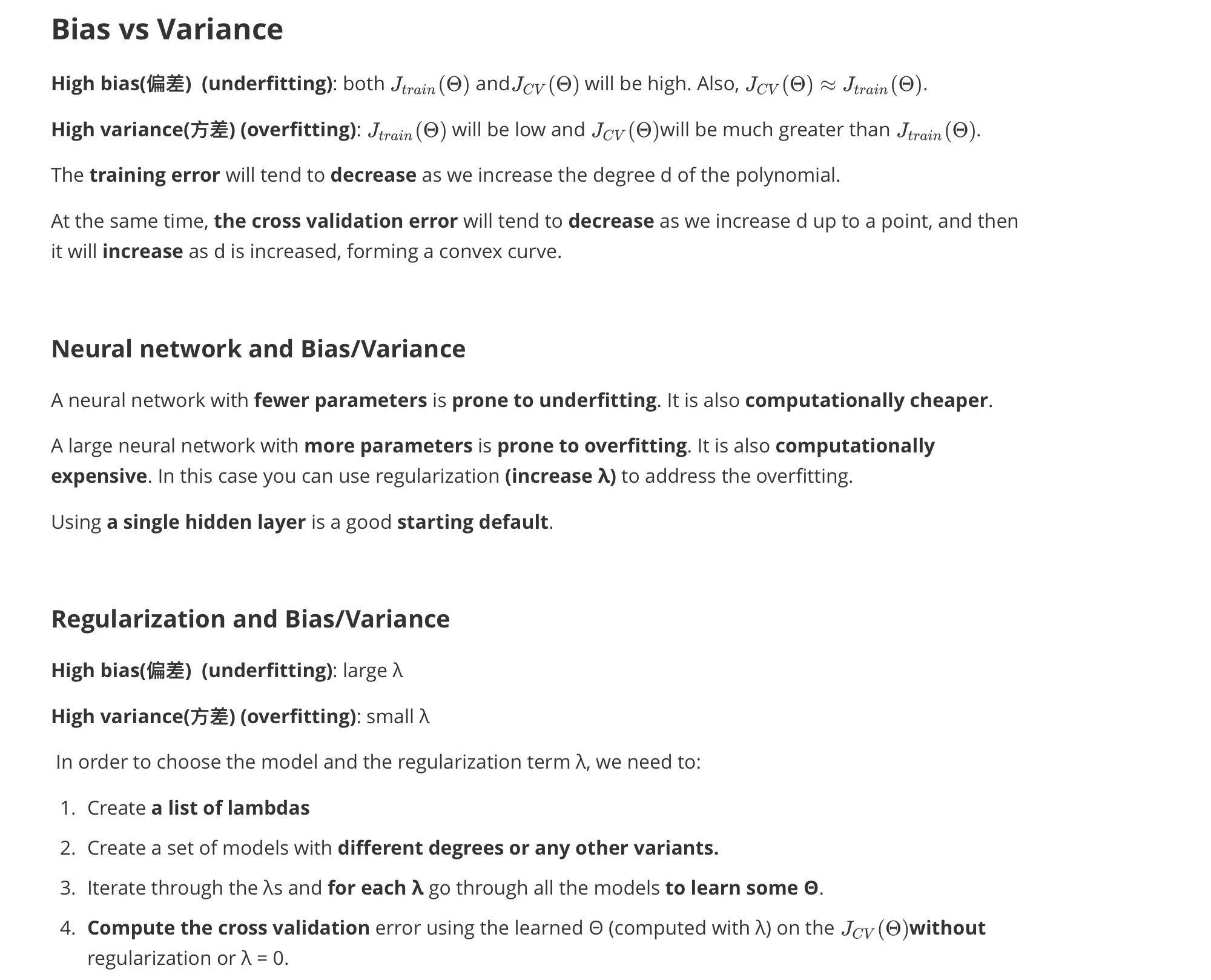

Getting more training examples (high variance)

适用在算法复杂,特征值少的(和隐藏层单元数无关)

使用大量的训练数据优化模型的性能,其要点包括:1、模型必须足够复杂,可以表示复杂函数,以至于数据大了之后,模型不会因为无法表示复杂函数而欠拟合;2、数据的有效性,数据本身有一定规律可循。

没有用正则化的模型大部分是underfiting,high bias不必有大数据训练。

Trying smaller sets of features (high variance)

Trying additional features (High bias)

Trying polynomial features (High bias)

Increasing λ (High bias)

decreasing λ (high variance)

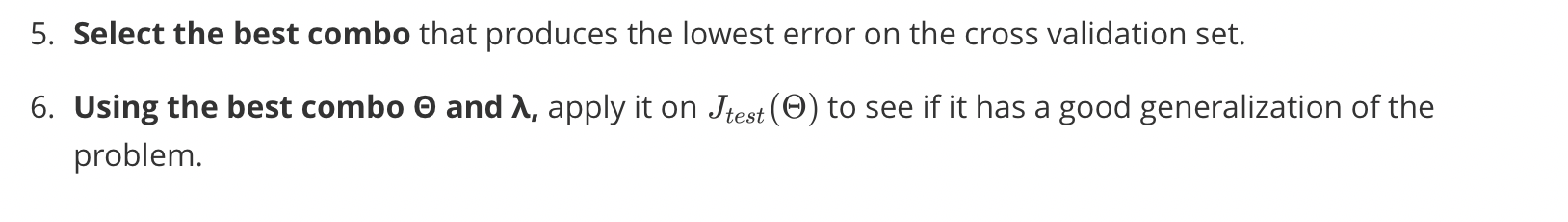

Solve machine learning problem

- Start with a simple algorithm, implement it quickly, and test it early on your cross validation data.

- Plot learning curves to decide if more data, more features, etc. are likely to help.

- Manually examine the errors on examples in the cross validation set and try to spot a trend where most of the errors were made.

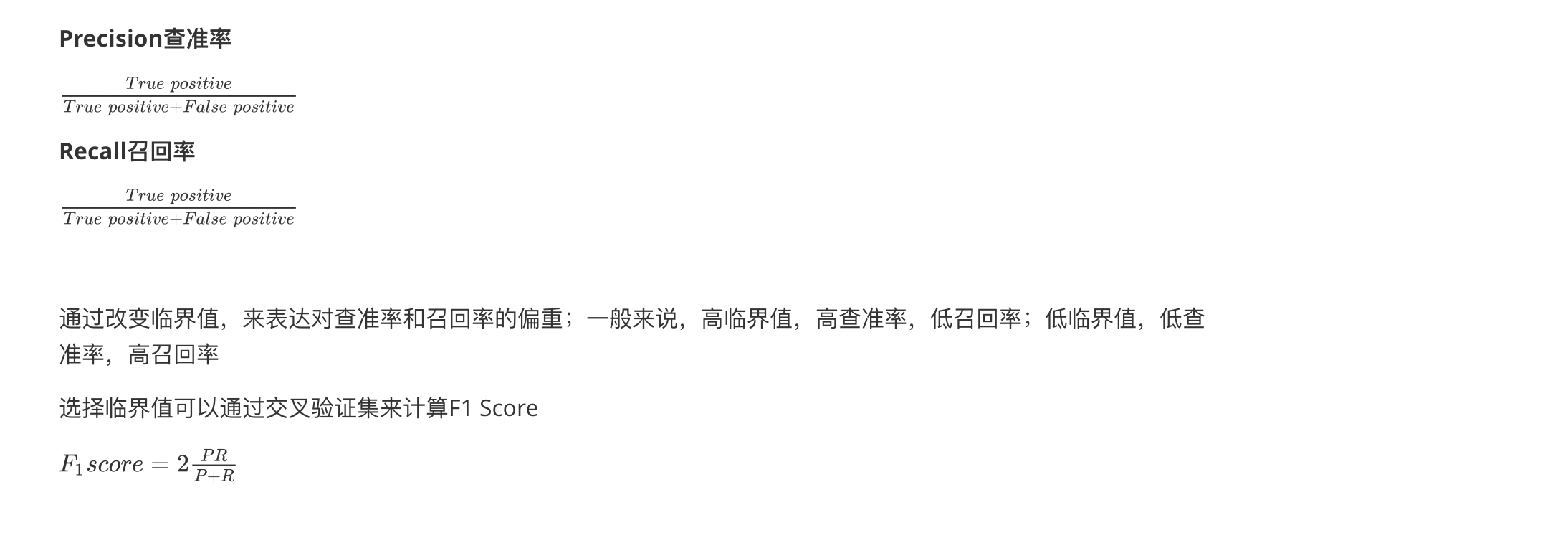

Skewed problem

偏斜类问题(正例和反例的个数相差太多)

| Actual | |||

|---|---|---|---|

| Predict | 1 | 0 | |

| 1 | True positive | False positive | |

| 0 | False negative | True negative |

后记

感觉这章用途还是很大哒

突然不知道这个后记说什么了

幸好 还理我 姑且认为还有希望吧

那就 晚安吧!

转载请注明出处,谢谢。

愿 我是你的小太阳