This is my blog.

这一章,大二的时候就去听过了演讲吧

大概就是做个卷积 什么池来着

然后弄了个手写识别

然后被嘲笑了

是的(抄代码的时候抄错了,然后被说你个搞ACM的怎么这错误都会犯

我……emmmm……不和你们说话

就是一个很神奇的东西吧!

也感觉很有用!

我感觉我可能要看两遍这个,有点没懂(逃

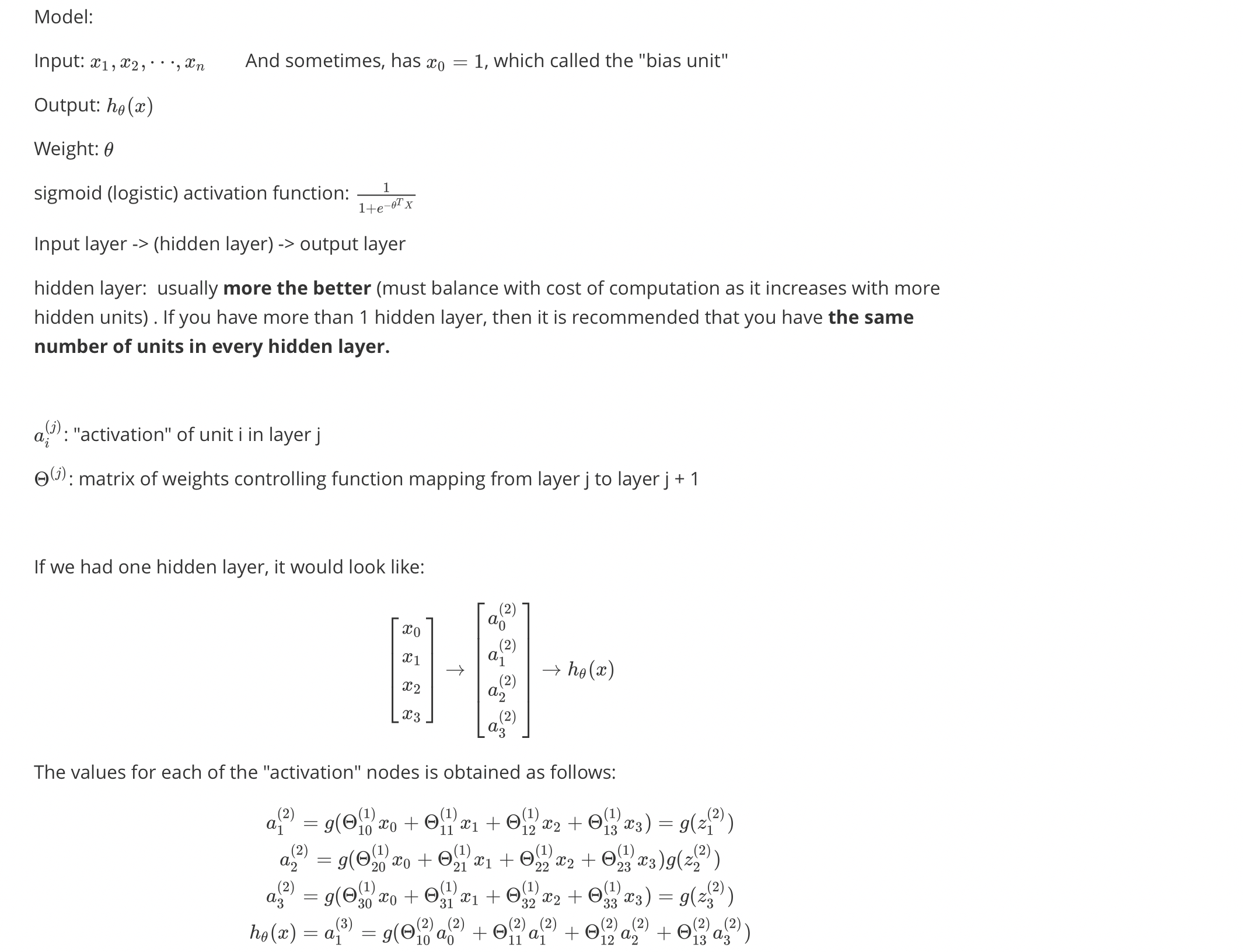

Lesson 8 Neural Networks

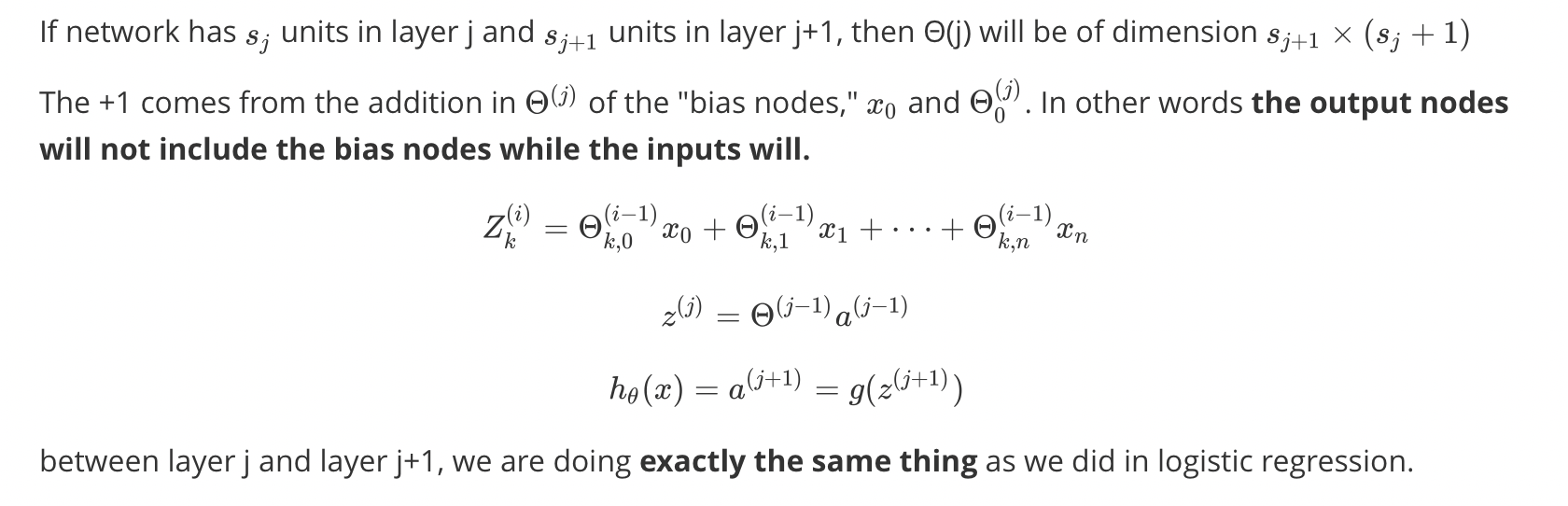

Training a Neural Network

Lesson 9 Cost function and backpropagation

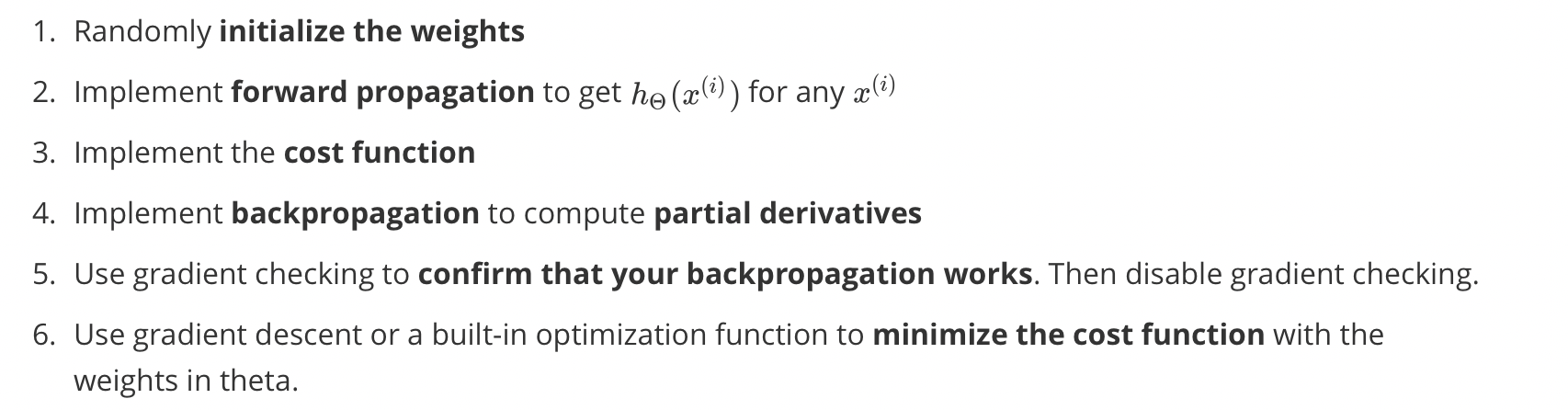

Cost function

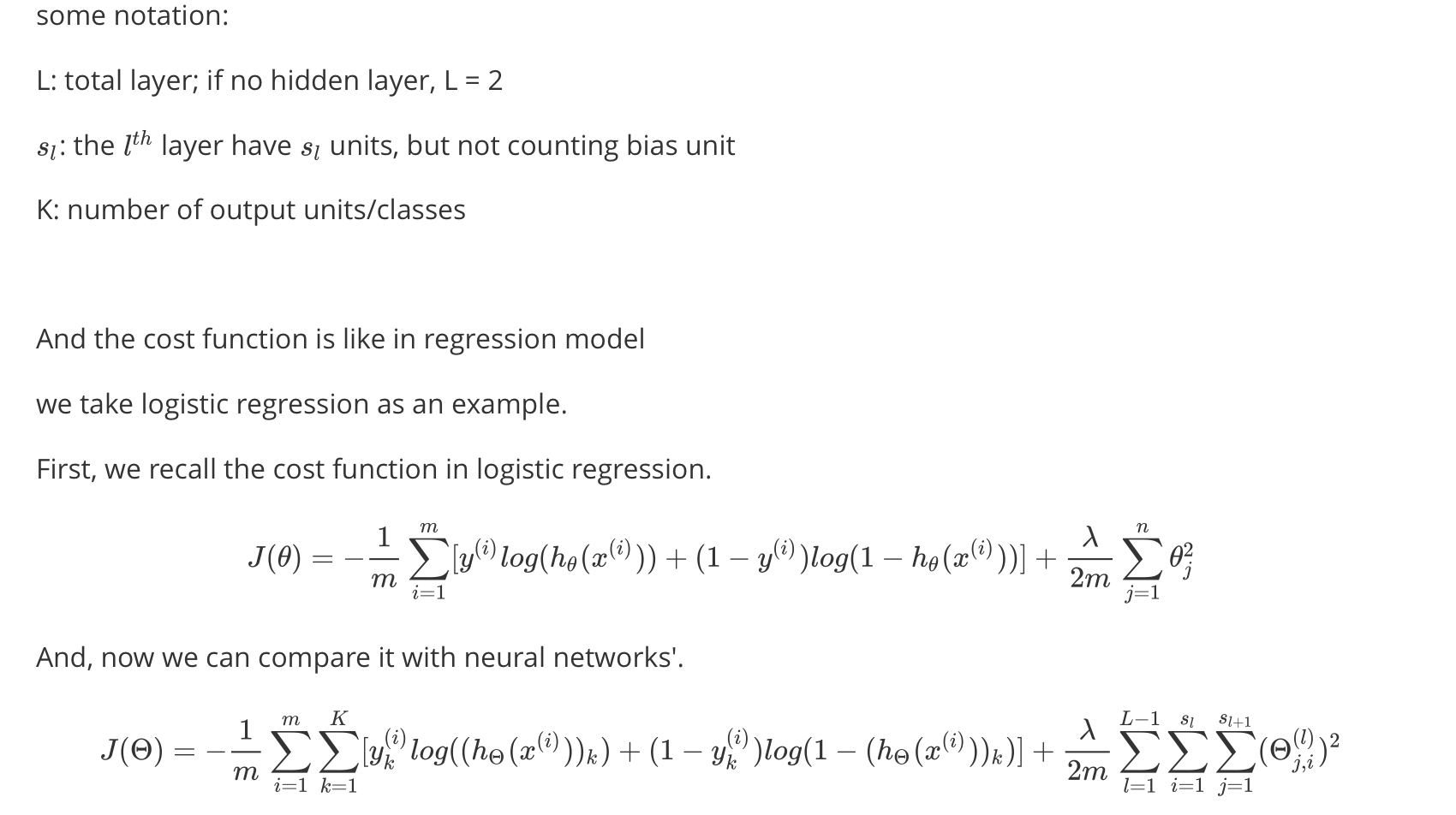

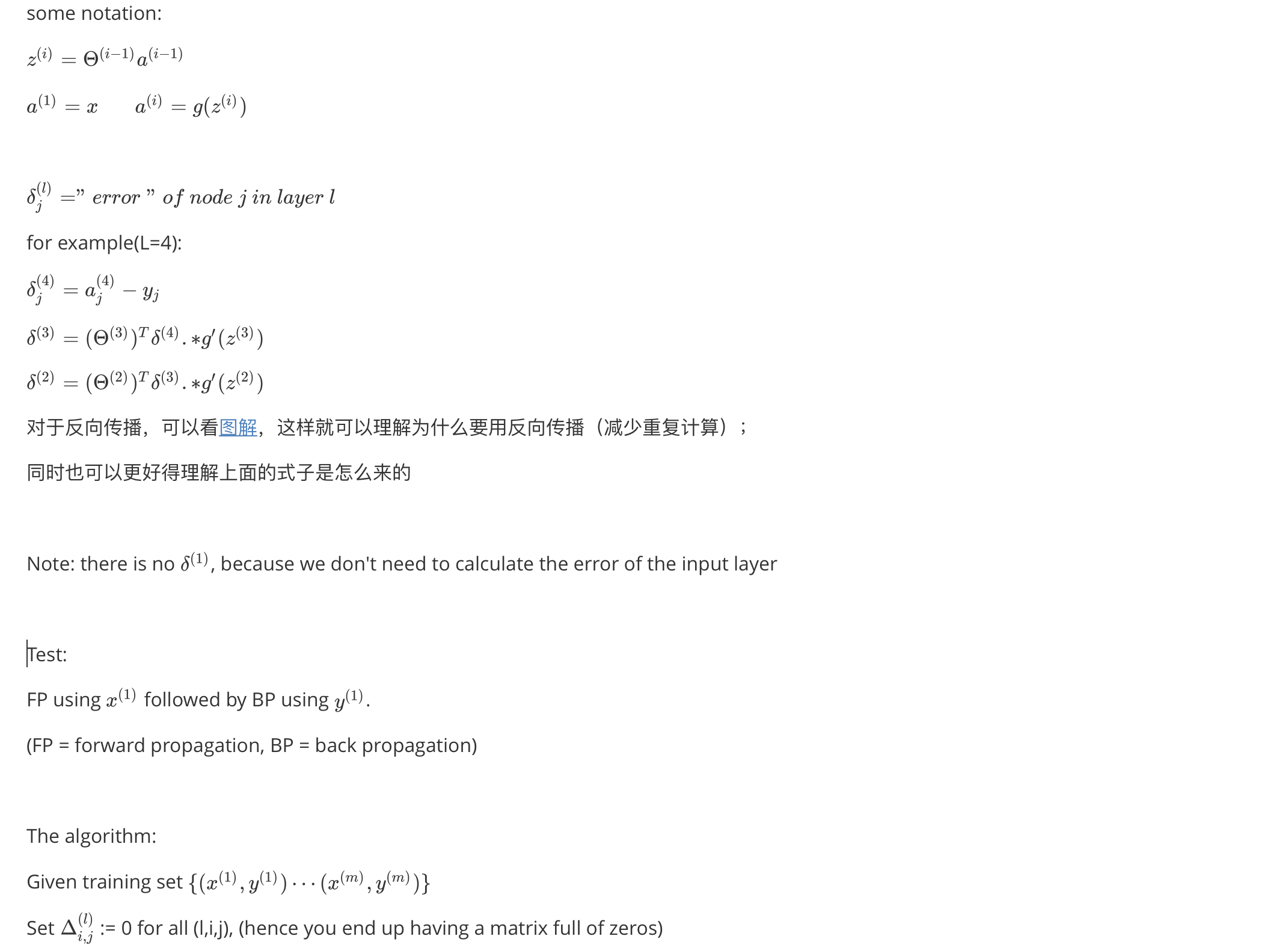

backpropagation algorithm

|

|

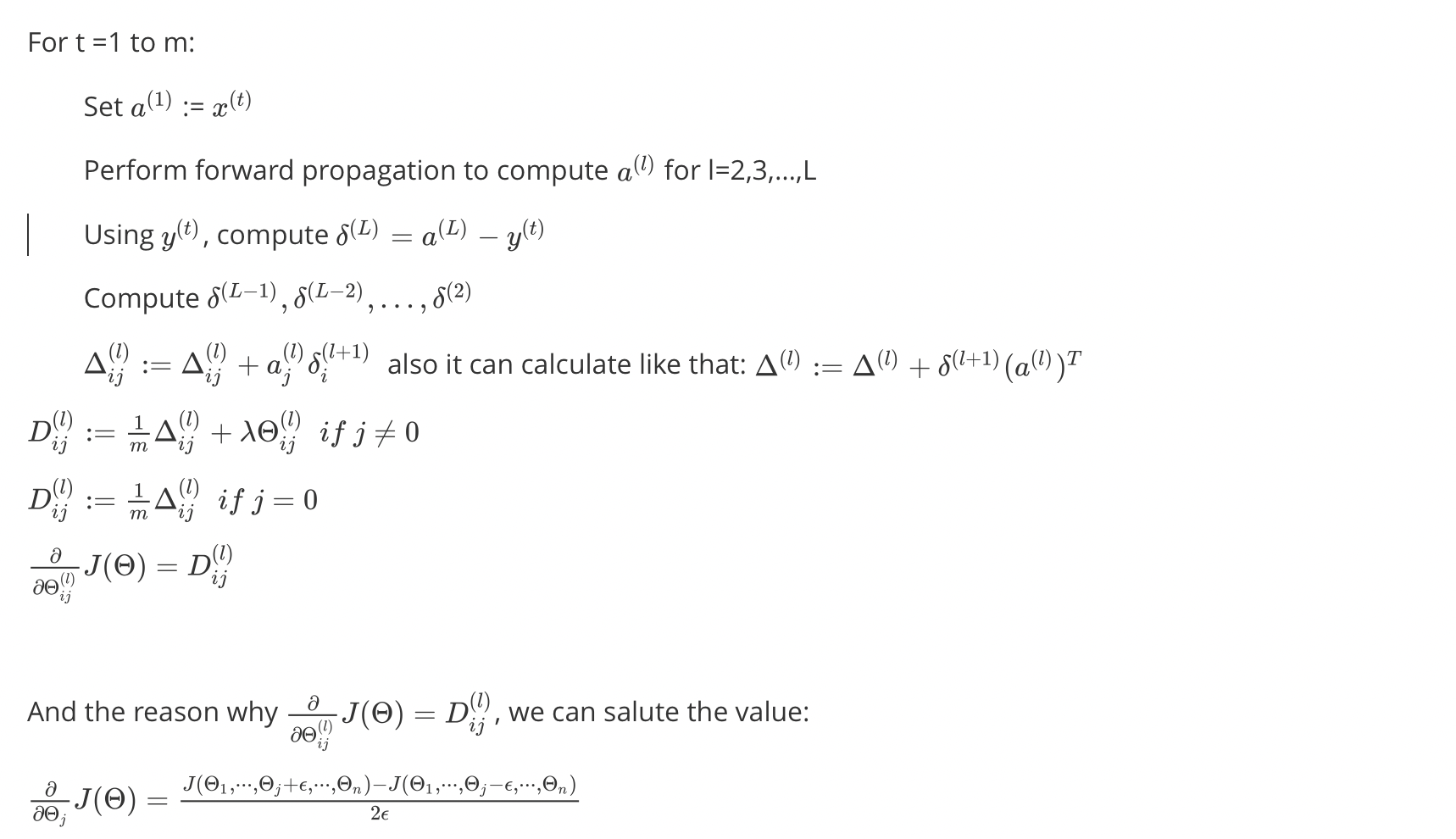

And you will find gradApprox ≈ deltaVector.

Once you have verified once that your backpropagation algorithm is correct, you don’t need to compute gradApprox again. The code to compute gradApprox can be very slow.

In Octave

In order to use optimizing functions such as “fminunc()”, we will want to “unroll” all the elements and put them into one long vector:

|

|

If the dimensions of Theta1 is 10x11, Theta2 is 10x11 and Theta3 is 1x11, then we can get back our original matrices from the “unrolled” versions as follows:

|

|

and then we can use fminunc

|

|

Random initial

Initializing all theta weights to zero does not work with neural networks(It does work in liner). When we backpropagate, all nodes will update to the same value repeatedly.

因为如果神经网络计算出来的输出值都一个样,那么反向传播算法计算出来的梯度值一样,并且参数更新值也一样(Theta=Theta−α∗dTheta)。更一般地说,如果权重初始化为同一个值,网络就不可能不对称(即是对称的)。

|

|

后记

嘿嘿嘿

今天看到了好多小哥哥 小姐姐

羡慕小姐姐的美貌

还有会跳舞的

拿到了好多吃的(我绝对没有去要,都是自愿给我的

嘿嘿嘿

转载请注明出处,谢谢。

愿 我是你的小太阳