This is my blog.

新的一门课开始啦~

感觉还不错啦~

新的一门课,找首新歌吧~

Suggestion

- 多读文献

- 编程

- 培养自己的直觉,并且相信自己的直觉

What is Neural Nerworks

激活函数

具体可见之间文章手写数字识别

每层的激活函数可以不同

线性整流函数(Rectified linear unit) RELU

但是在负数的时候,导数为0;因此出现了leaky Relu,eg.max(0.01z,z), the 0.01 can change,但后者不常使用

max(0,y)

达到数据中心化的效果

一般来说,效果比sigmoid更好

激活函数一般不用线性的,或者不用不用(就是$g(z)=z$)

这样会使隐藏层没有存在的意义,也就是说神经网络和没有学习能力一样,只存在着线性的惯性,多步可以合成一个大点的线性关系;

线性激活函数一般用在输出层,用来预测房价类型的问题

Structured Data

给出结构化数据,每一个特征都有清晰的定义

每一个特征都有明确的数值

Unstructured Data

非结构的,例如音频,图片,文字

The iterative process of developing DL systems: Idea->Code->Experiment->Idea->….

NN

之后的博文会更新,更新完后,会在此加上链接

- Standard NN

- CNN

- RNN

Logistics Regression as a neural network

It is a Binary classification (二分类问题)

这里只有两层(输入层不算)

在Regression Model-Lesson6提到过一些概念,现大致重述如下:

optimization

vectorization

在python的numpy包中有许多内置函数,可以进行并行运算,比for循环更快

You can get time running time of one programm is to use time.time() method in package time. 单位10^{-6}s

一些内置函数

|

|

给一下测试的结果

|

|

在python中,若是一个向量加上一个常数,那么就等同于一个向量加上一个同样大小的向量,并且这个向量的值都是这个常数,这叫作broadcasting

因此现在我们可以将方法二的进行向量化

|

|

接下来,我们来了解一下python中的broadcasting

当array的时候,(m,n) 要[+,-,*,/] (1,n)或者(m,1)的向量的时候,后者会扩展为(m,n)的向量,再进行运算

注意当用array创建的时候,用np.random.randn(5,1)而不是np.random.randn(5),前者创建的是矩阵,后者创建的是秩为1的数组;后者是形如[[1, 2, 3]]前者是[1, 2, 3]

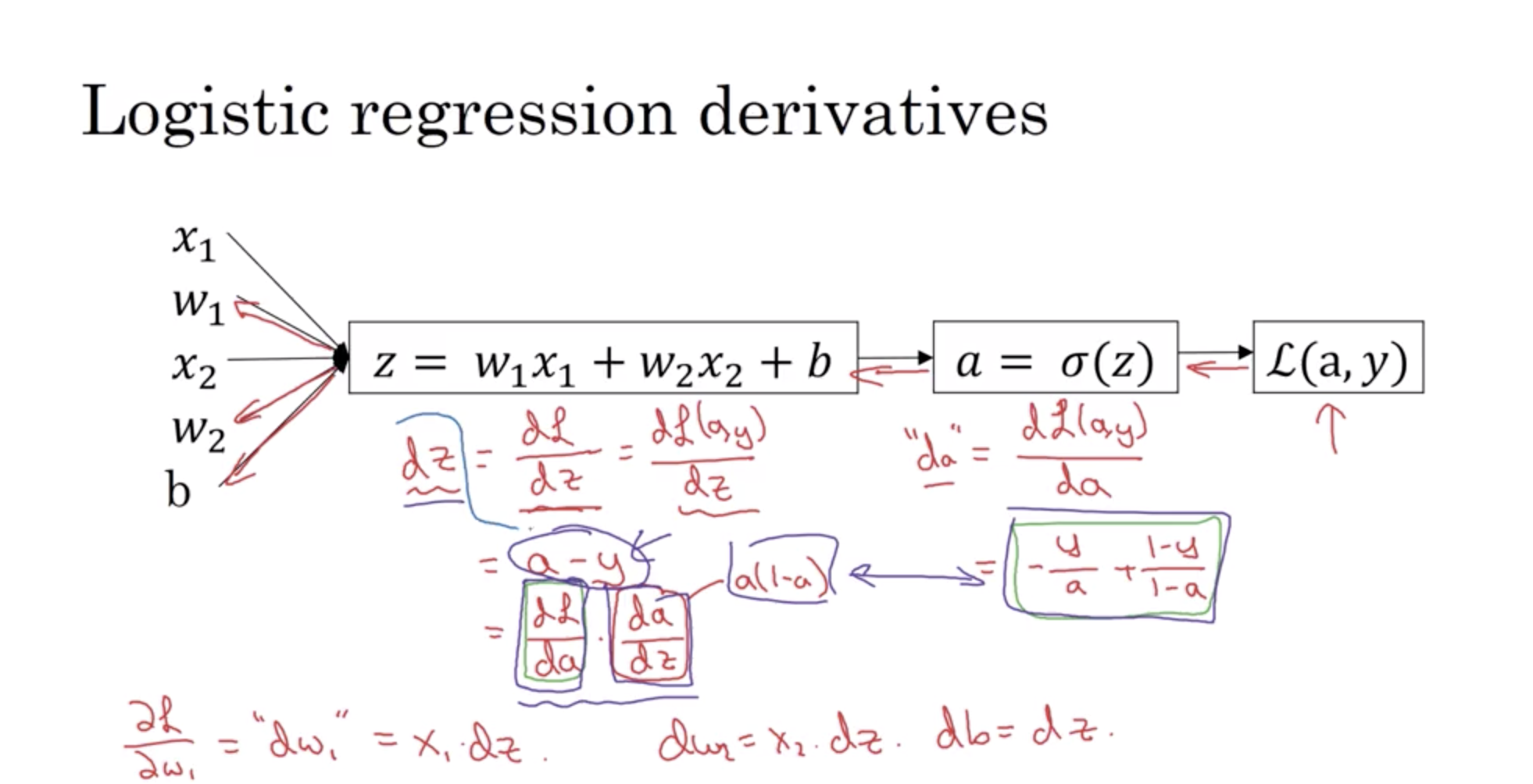

Computation graph

正向流程图,forward to compute the cost function

One step of backward propagation on a computation graph yields derivative of final output variable.后向计算导数,优化代价函数

链式法则:感觉有点像蝴蝶效应(我瞎说的,就是一种影响的传播

python

建议完成课程后的作业,感觉代码很棒呢!

|

|

Standard NN(标准神经网络)

The general methodology to build a Neural Network is to:

- Define the neural network structure ( # of input units, # of hidden units, etc).

- Initialize the model’s parameters

- Loop:

- Implement forward propagation

- Compute loss

- Implement backward propagation to get the gradients

- Update parameters (gradient descent)

逻辑回归模型中,可以初始化为0,因为:

Logistic Regression doesn’t have a hidden layer. If you initialize the weights to zeros, the first example x fed in the logistic regression will output zero but the derivatives of the Logistic Regression depend on the input x (because there’s no hidden layer) which is not zero. So at the second iteration, the weights values follow x’s distribution and are different from each other if x is not a constant vector.

但是在神经网络中不可以,因为这样隐藏单元会一直是同样的功能,即隐藏神经元是对称的

由w影响的的,所以b可以初始化为0

而w则需使用np.random.randn(),同时,将这个生成的数,乘以一个较小的值,如0.01,这样通过计算得到的z绝对值较小,在激活函数中的斜率比较大,学习速率就可以大些。

同时每一层乘的数最好不相同。

在编程的时候,对于每一个变量最好写上shape

第一层网络一般被认为是特征检测器

the “cache” records values from the forward propagation units and sends it to the backward propagation units because it is needed to compute the chain rule derivatives.

Hyperparameters 超参数

都会影响W和b

- learning rate

- Iteration

- Hidden layers

- Hidden units

- choice of activation function

在其他神经网络中我们还会遇到的超参数:

- Momentum term

- Mini batch size

- Regularizations Parameters

- ……

注:The deeper layers of a neural network are typically computing more complex features of the input than the earlier layers.

we cannot avoid a for loop iterating over the layers

To compute the function using a shallow network circuit, you will need a large network (where we measure size by the number of logic gates in the network), but to compute it using a deep network circuit, you need only an exponentially smaller network.

CNN(卷积Convolutional神经网络)

在图像处理中,我们总是将卷积结构放在神经网络中,我们称这种处理方式为CNN

RNN(递归Recurrent神经网络)

序列的数据,我们一般用RNN;例如音频,语言

后记

逻辑回归神经网络,刚开始看machine learning这个课程的时候,

含含糊糊,到写了机器学习的作业后,开始推导公式,

一步一步理解,

到现在再来一遍的时候,

感觉舒服多了

原来这门课只有四周很开心呐~

所以,我的零食什么时候到呀!

哦,对!我的解释器,emmm……

转载请注明出处,谢谢。

愿 我是你的小太阳